Jaeger Notes

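1. Preface

Tracing is a concept that has only recently started to become widespread. More and more systems are moving to microservices, or to distributed systems designed along similar lines. As their internal complexity grows, it becomes very hard to observe the full lifecycle of a single request, and just as hard to debug it. Tracing emerged as a requirement to solve this problem. The earliest solution was an open-source project called Zipkin. Later the CNCF hosted a specification for tracing, OpenTracing, and also incubated a project, Jaeger. Today almost everyone with this need uses Jaeger; the earlier wave has been overtaken by the next one.

For the differences between monitoring, tracing, and logging, see: Compare: Monitoring | Tracing | Logging.

Everything said about Jaeger below is based on version 1.11.0.

External links:

- An Alibaba Cloud blog post that is well worth reading: 开放分布式追踪(OpenTracing)入门与 Jaeger 实现

- OpenTracing语义标准规范及实现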

2. OpenTracing

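OpenTracing has its own site under the CNCF: opentracing.io. The site mainly introduces the high-level concepts and offers essentially no usable implementation. For an implementation, see Jaeger, mentioned above and covered later in this document.

In the site's documentation, the useful part starts at: OpenTracing Overview. Once you have gone through the conceptual parts, the rest can mostly be skipped.

OpenTracing has an official Specification: a Chinese translation and the English original. Since it is a spec, it contains everything you need to know; read it through if you have the time.

Note that the spec is explicitly versioned. The current version is: 1.1 - 19 Sep 2018.

The previous version is: 1.0 - 23 Dec 2016, so the spec is clearly quite stable.

In addition, the spec comes with a set of semantic conventions that are worth a look.

The main ones to follow are:

If your use case matches one of the situations defined in those conventions, you must follow them. It is similar to a coding style: the code may compile and run fine without it, but you still follow the convention for the sake of consistent, readable code.

The following sections introduce the concepts that make up tracing, one at a time.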

2.1 Trace

Trace: The description of a transaction as it moves through a distributed system.

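In other words, a Trace describes one transaction as it travels through a distributed system.

A Trace represents one complete transaction. For example, take a complex API system whose backend is implemented by a large number of microservices: a single API request that needs ten or more microservices to cooperate is one Trace. The work done by each microservice in that example can be regarded as a Span.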

2.2 Span

Span: A named, timed operation representing a piece of the workflow. Spans accept key:value tags as well as fine-grained, timestamped, structured logs attached to the particular span instance. Span context: Trace information that accompanies the distributed transaction, including when it passes the service to service over the network or through a message bus. The span context contains the trace identifier, span identifier, and any other data that the tracing system needs to propagate to the downstream service.

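In other words, a Span is a named, timed operation that represents one piece of the workflow. A Span instance accepts key:value tags as well as fine-grained, timestamped, structured logs.

The Span context is the trace information that travels with the distributed transaction, including when it passes from service to service over the network or through a message bus. It contains the trace identifier, the span identifier, and any other data the tracing system needs to propagate to downstream services.

2.2.1 Relationship between Trace and Span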

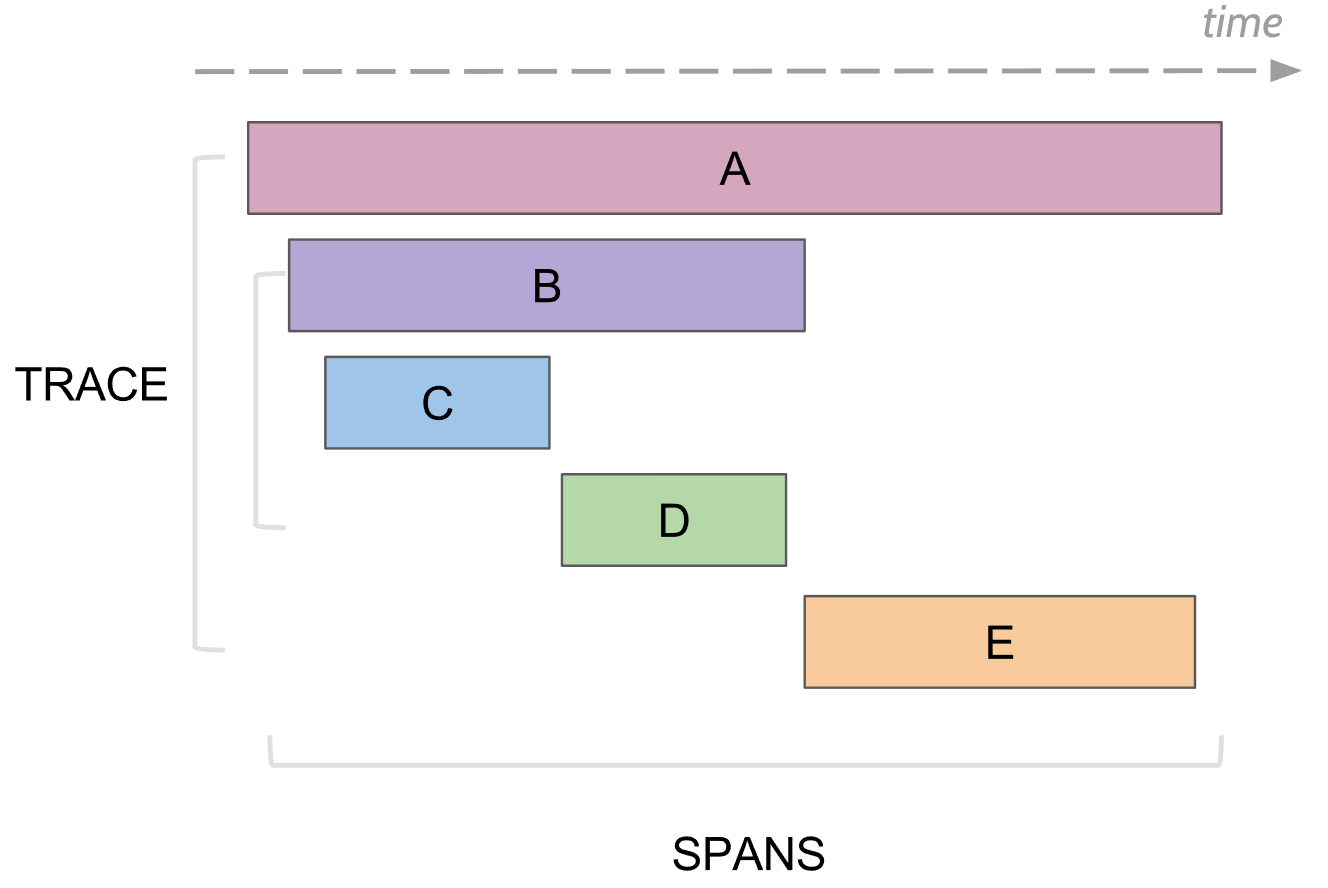

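The figure above is an example of the relationship between a Trace and its Spans.

- On the time axis, A covers the time of every child entry below it. This is easy to understand: all the Spans together make up one Trace, each Span takes time, and the sum of those times is the time consumed by the Trace

- Spans have a parent-child concept: B is the parent of C and D, while C and D are not parent and child of each other. The time of a parent overlaps with that of its children, just like the time relationship between all child Spans and the Trace: the time consumed by B is the sum of C and D, and the time consumed by A is the sum of B and E

In the figure:

- In Trace A, B and E are child Spans of A

- In Trace A, B and E run sequentially; E has to wait for B to finish

- The duration of A is the sum of B and E (in the ideal case)

- In Span B, C and D are child Spans of B

- In Span B, C and D run sequentially; D has to wait for C to finish

- The duration of B is the sum of C and D (in the ideal case)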
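The timing relationship in the figure can be checked with a small sketch. It is only an illustration under the ideal, strictly sequential case described above; the `Span` class, the millisecond values, and the `children_total` helper are hypothetical and not part of any OpenTracing library.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Span:
    """A named, timed operation; times are illustrative milliseconds."""
    name: str
    start: int
    finish: int
    children: List["Span"] = field(default_factory=list)

    @property
    def duration(self) -> int:
        return self.finish - self.start


def children_total(span: Span) -> int:
    """Sum of the durations of the direct children of a span."""
    return sum(child.duration for child in span.children)


# The idealized tree from the figure: C then D inside B, B then E inside A.
c = Span("C", start=0, finish=30)
d = Span("D", start=30, finish=50)
b = Span("B", start=0, finish=50, children=[c, d])
e = Span("E", start=50, finish=80)
a = Span("A", start=0, finish=80, children=[b, e])

print(a.duration, children_total(a))  # 80 80
print(b.duration, children_total(b))  # 50 50
```

In a real system the parent usually does some work of its own and children may overlap, so the parent's duration is at least, not exactly, what its sequential children need.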

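2.2.2 Span attributes

- An operation name

- A start timestamp

- A finish timestamp

- Span Tags: a set of key:value pairs. Keys must be strings; values may be strings, booleans, or numeric types

- Span Logs: a set of logs for the span

  - Each log operation contains a key:value pair and a timestamp

  - Keys must be strings; values may be of any type

  - Note, however, that not every OpenTracing-compatible Tracer has to support every value type

- SpanContext: the span context object

- References: relationships between Spans, pointing to zero or more related Spans (Spans are related to each other through their SpanContext)

Span Tag examples:

- db.instance: "jdbc:mysql://127.0.0.1:3306/customers"

- db.statement: "SELECT * FROM mytable WHERE foo='bar';"

Span Logs example:

- message: "Can't connect to mysql server on '127.0.0.1'(10061)"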
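The attribute list above can be pictured as a plain data structure. The following is a minimal sketch, assuming a hypothetical `SpanRecord` class of my own naming; it is not the API of any tracer, it only enforces the type rules stated above for tags and logs.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional, Tuple


@dataclass
class SpanRecord:
    """Hypothetical in-memory model of the span attributes listed above."""
    operation_name: str
    start_time: float
    finish_time: Optional[float] = None
    tags: Dict[str, Any] = field(default_factory=dict)
    logs: List[Tuple[float, Dict[str, Any]]] = field(default_factory=list)

    def set_tag(self, key: str, value: Any) -> None:
        # Tag keys must be strings; values must be str, bool, or numeric.
        if not isinstance(key, str):
            raise TypeError("tag keys must be strings")
        if not isinstance(value, (str, bool, int, float)):
            raise TypeError("tag values must be str, bool, or numeric")
        self.tags[key] = value

    def log_kv(self, fields: Dict[str, Any],
               timestamp: Optional[float] = None) -> None:
        # Log keys must be strings; values may be of any type.
        if not all(isinstance(k, str) for k in fields):
            raise TypeError("log keys must be strings")
        self.logs.append((time.time() if timestamp is None else timestamp,
                          dict(fields)))


span = SpanRecord("mysql.query", start_time=time.time())
span.set_tag("db.instance", "jdbc:mysql://127.0.0.1:3306/customers")
span.log_kv({"message": "Can't connect to mysql server on '127.0.0.1'(10061)"})
span.finish_time = time.time()
```

Tags describe the span as a whole, while each log entry carries its own timestamp, which is why the two are stored differently here.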

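2.2.3 SpanContext attributes

- Any implementation-dependent state (for example, the trace and span ids) that is needed to refer to a distinct Span across a process boundary

- Baggage Items: data that travels along with the trace. It is a set of key:value pairs that lives in the trace and also has to cross process boundaries

SpanContext example:

- trace_id: "abc123"

- span_id: "xyz789"

- Baggage Items:

  - special_id: "vsid1738"

2.2.4 Relationships between Spans

A Span can have a causal relationship with one or more SpanContexts. OpenTracing currently defines two kinds of relationship:

- ChildOf (parent-child)

- FollowsFrom (follows)

Examples of ChildOf references:

- The span for the server side of an RPC call is the ChildOf the span for the RPC client side

- The span for a SQL insert is the ChildOf the span for the ORM's save method

- Many spans doing concurrent (or distributed) work may all be the ChildOf a single parent span, which merges the results of all the children that return within a deadline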

3. Jaeger架构

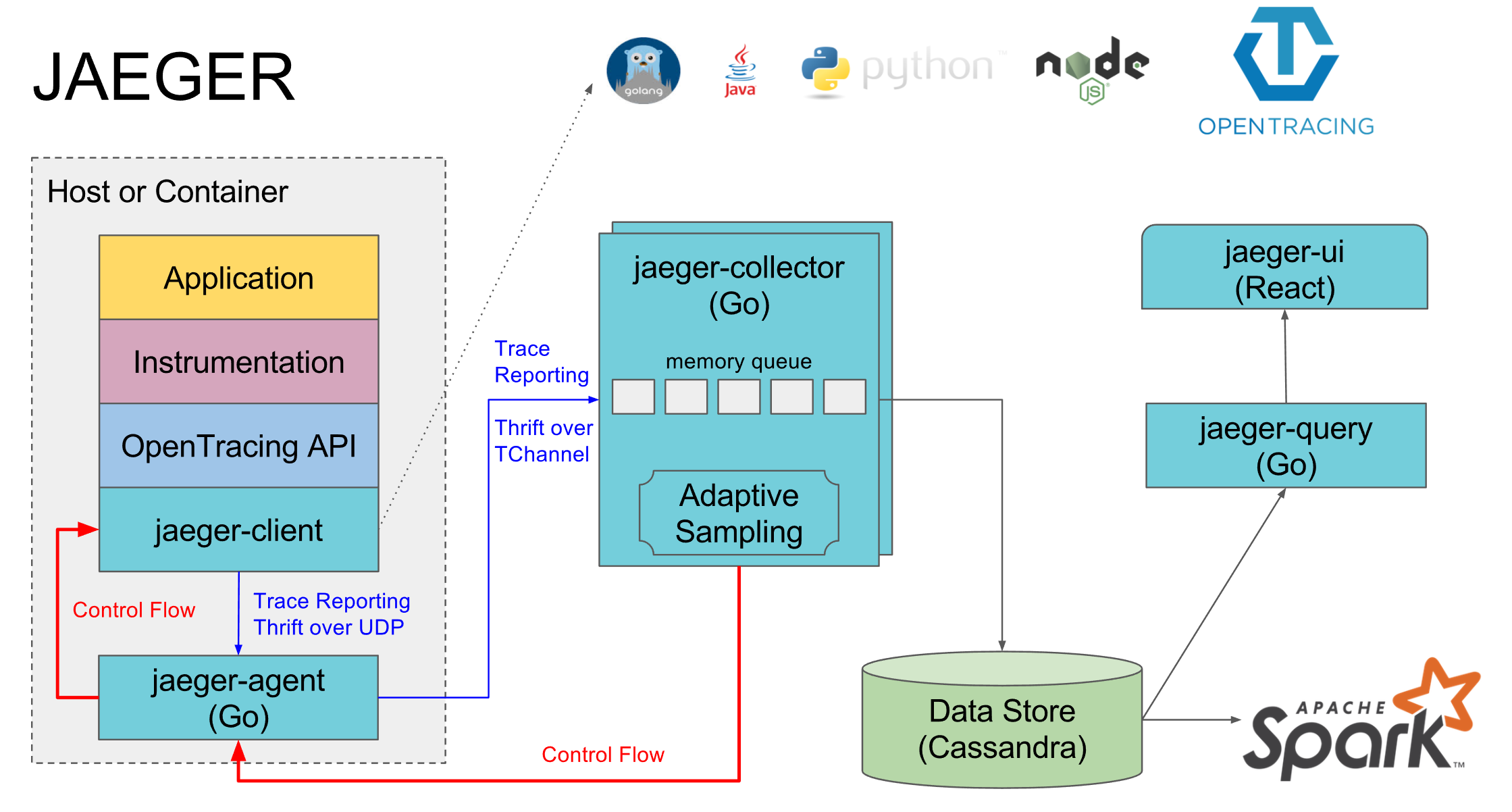

官网上(Architecture)有一张架构图,可以先看下:

图上的内容主要分几个部分,下面一个个说。

3.1 Jaeger Client

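The Jaeger client is the multi-language implementation of the OpenTracing API mentioned earlier. It is a library embedded in your application and therefore part of it. With it you can create the corresponding Span when a request arrives and attach the trace context to outgoing downstream requests.

The Jaeger Client can send trace data directly to the Collector, or work together with a local Agent to reduce the performance overhead. In practice this overhead is not large.

3.2 Jaeger Agent

The Jaeger Agent is a component deployed alongside the target application; you can think of it as one of the parts of the sidecar in a sidecar design. It is a long-running daemon that listens on UDP for trace data sent by the Client and forwards it to the Collector in batches. By design, the Agent decouples routing and service discovery from the Client.

3.3 Jaeger Collector

The Jaeger Collector is effectively Jaeger's server: it receives trace data from Agents, validates, indexes, and transforms it, and finally stores it.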

3.4 Data Store

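Jaeger's storage is designed as a pluggable component with several backends to choose from:

- Cassandra

- Elasticsearch

- Kafka (only as a buffer), that is, Kafka cannot be used as the final storage

Choosing a storage backend is a real question. A quick search did not turn up any authoritative comparison of the three, possibly because this area is new and moving fast. My personal advice is to use what you already know, with not adding system complexity as the first priority. My guesses:

- Cassandra is probably the most stable and should be the choice for large data volumes

- Feature-wise ELK is probably the best, since Elasticsearch was designed for full-text search in the first place

- Kafka should have the highest throughput, which it is well known for (as an intermediate channel)

Judging from the official documentation, the most recommended setup appears to be Kafka plus the Ingester as the pipeline, with Cassandra as the storage.

3.4.1 Elasticsearch support

Jaeger's best-supported storage backend is Cassandra; Elasticsearch is the second officially supported storage. Documentation: ElasticSearch Support.

Note that the current version 1.11.x does not support the latest Elasticsearch 7.x: Support Elasticsearch 7.x #1474, and by the look of it support will not arrive soon. For the practice later in this document I simply chose Cassandra as the storage; with storage, safety comes first.

3.5 Jaeger Query

Jaeger's query engine. The UI sends query requests to this component, which queries the backing data store and returns the results. The component also serves the HTTP UI when it starts.

3.6 Jaeger Ingester

There is also an Ingester component, but I found very little information about it. The official description: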

Ingester is a service that reads from Kafka topic and writes to another storage backend (Cassandra, Elasticsearch).

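It appears to be middleware for handling throughput.

3.7 Log mode

In Jaeger's current design the Client and the Agent communicate over UDP, so the Agent has to be reachable at the moment spans are sent. This places demands on the robustness of the Agent: if the Agent is down, the Client's tracing is effectively unusable. The advantage of writing traces to log files would be to decouple the Client from the Agent completely and let the operating system's disk I/O take care of trace generation. The project has no plans to do this, and there is no design discussion about it (at least none that I could find).

The project does, however, discuss a related idea: if an external store already holds tracing data, can Query connect to it directly to display and analyze that data? See:

If this were implemented, it would bypass Jaeger's entire lifecycle:

- No need to embed the Jaeger Client in the application (just use a logging component to write traces as logs)

- No need to put the Jaeger Agent in the sidecar (any log-shipping agent will do: Logstash, Filebeat)

- No need to run a Collector cluster (the log-shipping agent sends directly to Elasticsearch)

You would simply reuse the ELK log aggregation stack (if you already run one) and point Jaeger's Query directly at Elasticsearch to analyze the tracing logs. This both reduces overall system complexity (no need for the whole Jaeger stack) and lowers overall system load (disk I/O is always the cheapest resource).

We will see how this develops. But if it really gets implemented, is there still a reason for the Jaeger project to exist...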

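4. Using Jaeger

For a first introduction, see the official documentation: Getting started. For installation and deployment in a real production environment, read the official documentation carefully: Deployment.

If you use the all-in-one example image, you can run:

```
$ docker run -d --name jaeger \
  -e COLLECTOR_ZIPKIN_HTTP_PORT=9411 \
  -p 5775:5775/udp \
  -p 6831:6831/udp \
  -p 6832:6832/udp \
  -p 5778:5778 \
  -p 16686:16686 \
  -p 14268:14268 \
  -p 9411:9411 \
  jaegertracing/all-in-one:1.11.0
```

The main thing to understand here is the set of ports: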

| Port | Protocol | Component | Function |
|---|---|---|---|
| 5775 | UDP | agent | accept zipkin.thrift over compact thrift protocol (deprecated, used by legacy clients only) |
| 6831 | UDP | agent | accept jaeger.thrift over compact thrift protocol |
| 6832 | UDP | agent | accept jaeger.thrift over binary thrift protocol |
| 5778 | HTTP | agent | serve configs |
| 16686 | HTTP | query | serve frontend |
| 16687 | HTTP | query | The http port for the health check service |
| 14267 | TChannel | collector | The TChannel port for the collector service |
| 14268 | HTTP | collector | accept jaeger.thrift directly from clients |
| 14269 | HTTP | collector | The http port for the health check service |
| 14250 | gRPC | collector | accept model.proto |
| 9411 | HTTP | collector | Zipkin compatible endpoint (optional) |

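Some additional notes:

The port list in the official Getting started documentation is missing port 14267. Its protocol is described as TChannel, which can be confirmed by searching the Deployment documentation or the collector's help output:

```
$ docker run \
  -e SPAN_STORAGE_TYPE=memory \
  jaegertracing/jaeger-collector:1.11.0 \
  --help
...
--collector.port int The TChannel port for the collector service (default 14267)
...
```

In addition, the description of port 14250 is not clear enough. Its protocol is actually gRPC, which runs over HTTP/2:

```
...
--collector.grpc-port int The gRPC port for the collector service (default 14250)
...
```

And likewise 14268:

```
...
--collector.http-port int The HTTP port for the collector service (default 14268)
...
```

4.1 Running the Jaeger Collector standalone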

```
$ docker run -d --name jaeger-collector \
  -e SPAN_STORAGE_TYPE=memory \
  -p 14267:14267 \
  -p 14268:14268 \
  -p 14250:14250 \
  jaegertracing/jaeger-collector:1.11.0
```

4.2 Running the Jaeger Agent standalone

```
$ docker run -d --name jaeger-agent \
  --link jaeger-collector \
  -p 5775:5775/udp \
  -p 6831:6831/udp \
  -p 6832:6832/udp \
  -p 5778:5778 \
  jaegertracing/jaeger-agent:1.11.0 \
  --reporter.type=grpc \
  --reporter.grpc.host-port=jaeger-collector:14250
```

4.3 Running the Jaeger Query standalone

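```
$ docker run -d --name jaeger-query \
  --link jaeger-collector \
  -e SPAN_STORAGE_TYPE=memory \
  -p 16686:16686 \
  jaegertracing/jaeger-query:1.11.0
```

Note that with SPAN_STORAGE_TYPE=memory each process keeps its own in-memory store, so a Query started this way cannot see the spans held by a separately running Collector. The three components only work together over a shared backend such as Cassandra.

5. Jaeger high availability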

5.1 Metrics

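Jaeger can also expose its metrics in Prometheus format. Two command-line flags control this:

- --metrics-backend: the format in which metrics are exposed; the default is prometheus, and expvar is also available

- --metrics-http-route: the endpoint on which metrics are exposed; the default is /metrics

In addition, a few ports are worth knowing: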

| Component | Port |
|---|---|
| jaeger-agent | 5778 |
| jaeger-collector | 14268 |
| jaeger-query | 16686 |
| jaeger-ingester | 14271 |

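Sample output for each is in the reference material at the end of this document:

- jaeger-agent

- jaeger-collector

- jaeger-query

- jaeger-ingester (its dependencies are too heavy to start up, so I have not tried it yet)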

5.2 Components

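Conclusion first: Jaeger's design and implementation of high availability is basically blank, and tracing data may well be lost in some extreme situations.

5.2.1 Client

The Client is just a library inside the application, so the notion of high availability does not apply to it.

5.2.2 Agent

The Agent is just a forwarding relay. I searched the documentation and the web and found no high-availability design, and error handling is basically absent as well. In other words, if the Agent fails, all data in its unsent buffer is lost, and so is the data the Client sends to the Agent in the meantime.

See: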

- What happens when the jaeger-agent is down on a machine? #1255

- Jaeger Agent does not buffer spans when the Collector is unavailable #1430

5.2.3 Collector

The collectors are stateless and thus many instances of jaeger-collector can be run in parallel.

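This differs a little from my initial understanding. I had assumed that identifying the child Spans of one Trace, cleaning and filtering them, and so on happened at the Collector. But since the official documentation states that the Collector is stateless, that cannot be the case. The Collector presumably does only simple validation and then writes directly to the backing Data Store.

What happens when the Agent is configured with a comma-separated list of stateless Collectors and one of them goes down still needs to be tested. Given Jaeger's current support for error handling and availability, my guess is that the data is simply lost.

Whether all the Spans that make up one Trace have arrived, or some of them are missing, does not appear to be handled or guaranteed at all. This means that some Traces may be findable in the UI while their Spans are incomplete. Frankly, that is a serious flaw.

5.2.4 Data Store

This part is entirely the responsibility of the chosen backend. Cassandra is well known for being dependable, and Kafka scales well too, but Kafka can only be used as an intermediate channel; the official documentation describes it as: kafka (only as a buffer).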

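6. Jaeger sampling

As I mentioned in Compare: Monitoring | Tracing | Logging, tracing is a high-frequency job that produces a very large volume of data, so performance can easily become a problem. Jaeger's approach is to sample according to rules rather than send and store every event that occurs.

What needs explaining here is the sampling decision. If every Span in a long Trace could decide on its own whether to be sampled, you would never see a complete Trace. Take a Trace made up of A, B, and C: Span A decides to sample, B decides not to, C decides to sample, and the Trace ends up missing B's data.

In Jaeger the sampling decision is made at the first node of the chain. Whether the current Trace is sampled is decided there, and every subsequent Span in the chain follows that decision. This way a sampled Trace does not lose any of its parts.

See: Sampling.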

The sampling decision will be propagated with the requests to B and to C, so those services will not be making the sampling decision again but instead will respect the decision made by the top service A. This approach guarantees that if a trace is sampled, all its spans will be recorded in the backend. If each service was making its own sampling decision we would rarely get complete traces in the backend.

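The project has also recognized a number of problems with the sampling strategies. A feature called Adaptive Sampling is in the works; for the problems and the design, see: Adaptive Sampling #365. In short, it allows sampling decisions to follow more sophisticated strategies.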
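The "decide once at the root, respect it downstream" rule can be sketched in a few lines. This is a toy model under my own assumptions: the `Context` class, the `handle` function, and the fixed trace id are hypothetical and do not correspond to any Jaeger client API.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass(frozen=True)
class Context:
    trace_id: str
    sampled: bool


def handle(service: str, parent: Optional[Context],
           decide: Callable[[], bool], recorded: List[str]) -> Context:
    """Start a span in `service`; only a root span calls `decide`."""
    if parent is None:
        ctx = Context(trace_id="trace-1", sampled=decide())
    else:
        ctx = parent  # downstream services respect the upstream decision
    if ctx.sampled:
        recorded.append(service)
    return ctx


def run_chain(root_decision: bool) -> List[str]:
    """Simulate the call chain A -> B -> C and return the recorded spans."""
    recorded: List[str] = []
    calls = {"n": 0}

    def decide() -> bool:
        calls["n"] += 1
        return root_decision

    ctx = handle("A", None, decide, recorded)
    ctx = handle("B", ctx, decide, recorded)
    handle("C", ctx, decide, recorded)
    assert calls["n"] == 1  # the decision is made exactly once, at the root
    return recorded


print(run_chain(True))   # ['A', 'B', 'C']
print(run_chain(False))  # []
```

Either every span of the trace is recorded or none is, which is exactly the property the quoted documentation describes.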

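6.1 Client configuration

With the most basic strategies, sampling follows the Client's configuration. Keep in mind that in this case sampling is done in the client, not on the server, and every client can use a different strategy.

Also, reading the documentation alone may not give the full picture; the issues help deepen the understanding:

6.1.1 Constant

Constant decision.

- Type: sampler.type=const

- Value: sampler.param=1 samples everything; sampler.param=0 drops everything

6.1.2 Probabilistic

Probabilistic decision.

- Type: sampler.type=probabilistic

- Value: sampler.param=0.1 samples roughly 1 in 10 traces

6.1.3 Rate Limiting

Rate limiting strategy.

- Type: sampler.type=ratelimiting

- Value: sampler.param=2.0 samples 2 traces per second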
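To make the three local strategies concrete, here is a simplified sketch of how each could decide. The class names and the `is_sampled` method are hypothetical; real Jaeger clients may derive the decision differently (for example from the trace id), so treat this only as an illustration of the semantics of `sampler.param`.

```python
import random
import time
from typing import Callable, Optional


class ConstSampler:
    """sampler.type=const: param 1 samples everything, 0 drops everything."""

    def __init__(self, param: int) -> None:
        self.decision = bool(param)

    def is_sampled(self) -> bool:
        return self.decision


class ProbabilisticSampler:
    """sampler.type=probabilistic: sample with probability `param`."""

    def __init__(self, param: float,
                 rng: Optional[random.Random] = None) -> None:
        if not 0.0 <= param <= 1.0:
            raise ValueError("param must be in [0, 1]")
        self.rate = param
        self.rng = rng or random.Random()

    def is_sampled(self) -> bool:
        return self.rng.random() < self.rate


class RateLimitingSampler:
    """sampler.type=ratelimiting: token bucket refilled at `param` per second."""

    def __init__(self, param: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = param
        self.capacity = max(param, 1.0)
        self.clock = clock
        self.tokens = self.capacity
        self.last = clock()

    def is_sampled(self) -> bool:
        now = self.clock()
        # Refill according to elapsed time, capped at the bucket capacity.
        self.tokens = min(self.capacity,
                          self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


# A fake clock makes the rate limiter deterministic for the demo.
fake_now = [0.0]
limiter = RateLimitingSampler(2.0, clock=lambda: fake_now[0])
burst = [limiter.is_sampled() for _ in range(3)]
fake_now[0] = 0.5  # half a second later one more token is available
later = limiter.is_sampled()
print(burst, later)  # [True, True, False] True
```

The injected `clock` and `rng` parameters exist only so the behaviour can be checked without waiting for real time or relying on chance.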

6.1.4 Remote

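Remote decision.

- Type: sampler.type=remote

In this case the Client asks the Agent which sampling strategy to use for the current service and then applies that strategy. The actual configuration lives on the Collector; see 6.2 below.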

sampler consults Jaeger agent for the appropriate sampling strategy to use in the current service.

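6.2 Collector configuration

Official documentation: Collector Sampling Configuration.

When the Client's sampling strategy is configured as sampler.type=remote, the Agent asks the Collector for the sampling strategy, and that configuration lives on the Collector. The configuration file must be specified when the Collector starts: --sampling.strategies-file. See: jaeger-collector memory --help.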

This option requires a path to a json file which have the sampling strategies defined.

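```json
{
  "service_strategies": [
    {
      "service": "foo",
      "type": "probabilistic",
      "param": 0.8,
      "operation_strategies": [
        {
          "operation": "op1",
          "type": "probabilistic",
          "param": 0.2
        },
        {
          "operation": "op2",
          "type": "probabilistic",
          "param": 0.4
        }
      ]
    },
    {
      "service": "bar",
      "type": "ratelimiting",
      "param": 5
    }
  ],
  "default_strategy": {
    "type": "probabilistic",
    "param": 0.5
  }
}
```

service_strategies defines service-level sampling strategies, and operation_strategies defines operation-level ones. Two kinds of strategy can be used: probabilistic and rate limiting (note: operation-level strategies do not support rate limiting). default_strategy defines the strategy for everything not covered by the definitions above.

In the example above:

- All operations of service foo are sampled with probability 0.8

- op1 and op2 belong to foo but have their own settings, so they are sampled with probabilities 0.2 and 0.4 respectively

- All operations of service bar are rate limited to 5 traces per second

- Everything else is sampled with probability 0.5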
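The lookup order described above (operation first, then service, then default) can be written down as a short sketch. The `resolve` function is hypothetical and only mirrors the prose; it is not taken from the collector's source code.

```python
import json
from typing import Any, Dict

# The strategies file from this section, embedded for the demo.
STRATEGIES = json.loads("""
{
  "service_strategies": [
    {
      "service": "foo",
      "type": "probabilistic",
      "param": 0.8,
      "operation_strategies": [
        {"operation": "op1", "type": "probabilistic", "param": 0.2},
        {"operation": "op2", "type": "probabilistic", "param": 0.4}
      ]
    },
    {"service": "bar", "type": "ratelimiting", "param": 5}
  ],
  "default_strategy": {"type": "probabilistic", "param": 0.5}
}
""")


def resolve(config: Dict[str, Any], service: str,
            operation: str) -> Dict[str, Any]:
    """Pick the most specific strategy: operation, then service, then default."""
    for svc in config.get("service_strategies", []):
        if svc["service"] != service:
            continue
        for op in svc.get("operation_strategies", []):
            if op["operation"] == operation:
                return {"type": op["type"], "param": op["param"]}
        return {"type": svc["type"], "param": svc["param"]}
    return dict(config["default_strategy"])


print(resolve(STRATEGIES, "foo", "op1"))    # {'type': 'probabilistic', 'param': 0.2}
print(resolve(STRATEGIES, "foo", "other"))  # {'type': 'probabilistic', 'param': 0.8}
print(resolve(STRATEGIES, "bar", "any"))    # {'type': 'ratelimiting', 'param': 5}
print(resolve(STRATEGIES, "baz", "any"))    # {'type': 'probabilistic', 'param': 0.5}
```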

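7. Monitoring Jaeger

Perhaps because the project is still young, material is very hard to find when putting monitoring into practice. Both documentation explaining the metrics and ready-to-use Grafana dashboards are basically nonexistent. Much of the work has to be figured out by hand.

7.1 Exporter

Jaeger exposes metrics out of the box, so there is no need to look for a third-party exporter.

The ports need a brief explanation. Metrics are exposed on each service's HTTP port, which by default means:

- jaeger-agent: 5778

- jaeger-collector: 14268

I could not find documentation explaining the exposed metrics. There is an open issue indicating that the work is in progress: Is there a document to explain the specific meaning of various metrics? #1224, but there is little hope of it landing soon.

Searching the code turns up brief descriptions of some metrics: jaeger/cmd/collector/app/metrics.go.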
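Until the metrics are documented, it can help to read the exposed text directly. The following sketch parses a few lines in the Prometheus text format with the standard library only. The `parse_metrics` helper and both regular expressions are my own and handle just the simple cases seen in the agent's output; the dropped-packet value 3 is made up for the demo.

```python
import re
from typing import Dict, Iterator, Tuple

# A small excerpt in the shape of the jaeger-agent /metrics output.
SAMPLE = '''\
# HELP jaeger_agent_thrift_udp_server_packets_dropped_total thrift.udp.server.packets.dropped
# TYPE jaeger_agent_thrift_udp_server_packets_dropped_total counter
jaeger_agent_thrift_udp_server_packets_dropped_total{model="jaeger",protocol="binary"} 0
jaeger_agent_thrift_udp_server_packets_dropped_total{model="jaeger",protocol="compact"} 3
process_open_fds 11
'''

LINE_RE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)$')
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="((?:[^"\\]|\\.)*)"')


def parse_metrics(text: str) -> Iterator[Tuple[str, Dict[str, str], float]]:
    """Yield (name, labels, value) for every sample line, skipping comments."""
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        match = LINE_RE.match(line)
        if match is None:
            continue  # ignore lines this simple parser does not understand
        name, raw_labels, value = match.groups()
        labels = dict(LABEL_RE.findall(raw_labels or ""))
        yield name, labels, float(value)


samples = list(parse_metrics(SAMPLE))
dropped = sum(value for name, _, value in samples
              if name == "jaeger_agent_thrift_udp_server_packets_dropped_total")
print(dropped)  # 3.0
```

A non-zero sum for the dropped-packets counter is one of the few direct signals that the Agent is losing spans, which ties in with the data-loss concerns in section 5.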

7.2 Grafana Dashboard

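A quick search on Grafana Labs did not turn up a dashboard that can be used directly. A few dashboards do show up, but they are old, and some of Jaeger's metrics have since been renamed, so none of them work properly. In general you need to build your own dashboard by hand.

The project intends to provide out-of-the-box dashboards; see this issue: Could we provide pre-made Grafana dashboards for Jaeger backend components? #789. But again, do not expect it soon.

The jsonnet-libs/jaeger-mixin/ mentioned in the issue is worth a quick look. It cannot be used directly, but it offers some guidance for building your own dashboard.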

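8. Docker cluster practice

8.1 Docker cluster configuration

The configuration is relatively simple. Example: dist-system-practice/conf/dev/jaeger-cluster.yaml.

The main thing is to get the port numbers straight; Jaeger opens so many ports that they are easy to mix up. In practice, though, only a handful are used much.

8.2 Storage Schema

The backend Jaeger mainly supports is Cassandra. Elasticsearch is supported, but only as a second-class citizen (version updates, support, and responses are all slower than for Cassandra), so use Cassandra if possible.

The experimental project uses Cassandra as its backend storage, version 3.11.4.

Before Jaeger can be used, the schema must be initialized in the backend storage; that is, the tables have to be created in the database first. The following mainly covers experience with Cassandra as the backend. For Elasticsearch, see the official documentation: ElasticSearch Support.

With Cassandra as the backend, the schema import code lives in: jaeger/plugin/storage/cassandra/schema/. Although the path says plugin, it can actually be used on its own.

The directory contains two *.cql.tmpl files, which are the actual CQL that gets imported. If you prefer not to use the bash script, you can use them directly, although you then need to do the same preparation and substitutions that the bash script does. For CQL itself, see the documentation:

8.2.1 Importing with cqlsh

Using cqlsh directly is not recommended; 8.2.2 below describes a better way.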

```
$ docker run -it --rm \
  --network dist_net \
  cassandra:3.11.4 \
  cqlsh \
  ${host} ${port} \
  -u cassandra -p cassandra \
  -f ${file_path}
```

For Cassandra's port numbers, see: Configuring firewall port access. cqlsh connects on the client port, which is 9042.

Default username and password:

The default user is cassandra. The default password is cassandra.

8.2.2 Importing with the tool image

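There is in fact an official Docker image that helps create the schema, but looking at the image alone you would have no idea what it is for, because it has no documentation at all. The official documentation does not mention it either; I only found it through the blog of one of Jaeger's maintainers while searching on Google. The documentation leaves something to be desired, although Jaeger is actually among the better projects in this respect.

Image: jaegertracing/jaeger-cassandra-schema. For usage, see the example in the blog post: Jaeger and multitenancy.

The example there is fairly old; an updated version: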

```
$ docker run --rm \
  --name jaeger-cassandra-schema \
  --network dist_net \
  -e MODE=test \
  -e CQLSH_HOST=cas_1 \
  -e KEYSPACE=jaeger_keyspace \
  jaegertracing/jaeger-cassandra-schema:1.11.0
```

Be sure to remember the name given in KEYSPACE=jaeger_keyspace; it has to be passed to the collector later when setting up the cluster.

For what the image does and which environment variables it accepts, see: jaeger/plugin/storage/cassandra/schema/docker.sh.

These environment variables ultimately feed into the create.sh script, so to see what they do and what the script does, see: jaeger/plugin/storage/cassandra/schema/create.sh.

8.3 Opentracing Coding Tutorial

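OpenTracing Go does have an official tutorial: OpenTracing Tutorial - Go. Again, the official documentation does not mention it at all.

The tutorial is very helpful both for people new to OpenTracing and for people who need to learn how to write OpenTracing client code. The examples are quite good.

8.4 Client Coding

Setting up the Jaeger cluster is not the end; the client integration code still has to be written. For the overall OpenTracing flow and concepts such as trace and span, first read the official documentation and the tutorial mentioned in 8.3.

One point is worth mentioning: with Jaeger you will inevitably run into context crossing process boundaries between RPC calls. In that case the upstream side must inject the trace information into the request, and the downstream side must extract it and continue the previous trace based on what was extracted. Only then does the cross-process request stay within the same trace. For details, see lesson 3 of the tutorial in 8.3.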
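The inject/extract round trip can be illustrated without any tracing library. This is a sketch under assumptions: the header name `uber-trace-id` and the `trace-id:span-id:parent-id:flags` hex layout reflect my understanding of Jaeger's propagation format and should be verified against the client you use; the `SpanContext` class and the `inject`, `extract`, and `child_of` helpers are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional

HEADER = "uber-trace-id"  # assumed header name, verify for your client


@dataclass(frozen=True)
class SpanContext:
    trace_id: int
    span_id: int
    parent_id: int
    flags: int  # in this sketch, bit 0 set means "sampled"


def inject(ctx: SpanContext, carrier: Dict[str, str]) -> None:
    """Upstream side: write the context into the outgoing request headers."""
    carrier[HEADER] = (f"{ctx.trace_id:x}:{ctx.span_id:x}:"
                       f"{ctx.parent_id:x}:{ctx.flags:x}")


def extract(carrier: Dict[str, str]) -> Optional[SpanContext]:
    """Downstream side: read the context back, or None if absent or invalid."""
    raw = carrier.get(HEADER)
    if raw is None:
        return None
    parts = raw.split(":")
    if len(parts) != 4:
        return None
    try:
        trace_id, span_id, parent_id, flags = (int(p, 16) for p in parts)
    except ValueError:
        return None
    return SpanContext(trace_id, span_id, parent_id, flags)


def child_of(parent: SpanContext, new_span_id: int) -> SpanContext:
    """Continue the same trace: keep trace id and flags, point at the parent."""
    return SpanContext(parent.trace_id, new_span_id,
                       parent.span_id, parent.flags)


upstream = SpanContext(trace_id=0xABC123, span_id=0x1, parent_id=0, flags=1)
headers: Dict[str, str] = {}
inject(upstream, headers)

received = extract(headers)
downstream = child_of(received, new_span_id=0x2)
print(headers[HEADER])                            # abc123:1:0:1
print(downstream.trace_id == upstream.trace_id)   # True
```

If the downstream side skipped `extract` and started a fresh context instead, the two halves of the request would show up as two unrelated traces.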

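Reading the following should be enough to understand how to write the client code.

- OpenTracing official documentation: Quick Start

- gRPC interceptor plugin, official: go-grpc-middleware/tracing/opentracing/

- Unofficial gRPC plugin: grpc-opentracing/go/otgrpc/

For ordinary client usage the official documentation is enough. gRPC is slightly different, and interceptor plugins are provided for it. For how to use these plugins, see the examples: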

9. TODO

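Given the depth of current usage and the time limit of the project investigation, some topics have not been explored deeply enough and could be filled in later:

- A deeper study of Jaeger's own metrics: Agent, Collector, Query

- Jaeger benchmarks

- In particular, data loss in Jaeger under high load

- The choice of backend Data Store

- When used together with gin, the gRPC client interceptor does not take effect (the server side works fine); this is odd and needs debugging later

Reference material

Links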

- 开放分布式追踪(OpenTracing)入门与 Jaeger 实现

- OpenTracing语义标准规范及实现

- The life of a span

- What happens when the jaeger-agent is down on a machine? #1255

- Jaeger Agent does not buffer spans when the Collector is unavailable #1430

- Adaptive Sampling #365

- How sampler.type=remote works #832

- Integration with external logs storage when displaying traces #649

- The Cassandra Query Language (CQL)

- Starting cqlsh

- Configuring firewall port access

- jaegertracing/jaeger-cassandra-schema

- Jaeger and multitenancy

- OpenTracing Tutorial - Go

- grpc-opentracing/go/otgrpc/

Help

jaeger-agent --help

$ docker run --rm jaegertracing/jaeger-agent:1.11.0 --help

Jaeger agent is a daemon program that runs on every host and receives tracing data submitted by Jaeger client libraries.

Usage:

jaeger-agent [flags]

jaeger-agent [command]

Available Commands:

help Help about any command

version Print the version

Flags:

--collector.host-port string Deprecated; comma-separated string representing host:ports of a static list of collectors to connect to directly (e.g. when not using service discovery)

--config-file string Configuration file in JSON, TOML, YAML, HCL, or Java properties formats (default none). See spf13/viper for precedence.

--discovery.conn-check-timeout duration Deprecated; sets the timeout used when establishing new connections (default 250ms)

--discovery.min-peers int Deprecated; if using service discovery, the min number of connections to maintain to the backend (default 3)

-h, --help help for jaeger-agent

--http-server.host-port string host:port of the http server (e.g. for /sampling point and /baggageRestrictions endpoint) (default ":5778")

--log-level string Minimal allowed log Level. For more levels see https://github.com/uber-go/zap (default "info")

--metrics-backend string Defines which metrics backend to use for metrics reporting: expvar, prometheus, none (default "prometheus")

--metrics-http-route string Defines the route of HTTP endpoint for metrics backends that support scraping (default "/metrics")

--processor.jaeger-binary.server-host-port string host:port for the UDP server (default ":6832")

--processor.jaeger-binary.server-max-packet-size int max packet size for the UDP server (default 65000)

--processor.jaeger-binary.server-queue-size int length of the queue for the UDP server (default 1000)

--processor.jaeger-binary.workers int how many workers the processor should run (default 10)

--processor.jaeger-compact.server-host-port string host:port for the UDP server (default ":6831")

--processor.jaeger-compact.server-max-packet-size int max packet size for the UDP server (default 65000)

--processor.jaeger-compact.server-queue-size int length of the queue for the UDP server (default 1000)

--processor.jaeger-compact.workers int how many workers the processor should run (default 10)

--processor.zipkin-compact.server-host-port string host:port for the UDP server (default ":5775")

--processor.zipkin-compact.server-max-packet-size int max packet size for the UDP server (default 65000)

--processor.zipkin-compact.server-queue-size int length of the queue for the UDP server (default 1000)

--processor.zipkin-compact.workers int how many workers the processor should run (default 10)

--reporter.grpc.host-port string Comma-separated string representing host:port of a static list of collectors to connect to directly.

--reporter.grpc.retry.max uint Sets the maximum number of retries for a call. (default 3)

--reporter.grpc.tls Enable TLS.

--reporter.grpc.tls.ca string Path to a TLS CA file. (default use the systems truststore)

--reporter.grpc.tls.server-name string Override the TLS server name.

--reporter.tchannel.discovery.conn-check-timeout duration sets the timeout used when establishing new connections (default 250ms)

--reporter.tchannel.discovery.min-peers int if using service discovery, the min number of connections to maintain to the backend (default 3)

--reporter.tchannel.host-port string comma-separated string representing host:ports of a static list of collectors to connect to directly (e.g. when not using service discovery)

--reporter.tchannel.report-timeout duration sets the timeout used when reporting spans (default 1s)

--reporter.type string Reporter type to use e.g. grpc, tchannel (default "grpc")

Use "jaeger-agent [command] --help" for more information about a command.

jaeger-collector memory --help

$ docker run --rm -e SPAN_STORAGE_TYPE=memory jaegertracing/jaeger-collector:1.11.0 --help

Jaeger collector receives traces from Jaeger agents and runs them through a processing pipeline.

Usage:

jaeger-collector [flags]

jaeger-collector [command]

Available Commands:

env Help about environment variables

help Help about any command

version Print the version

Flags:

--collector.grpc-port int The gRPC port for the collector service (default 14250)

--collector.grpc.tls Enable TLS

--collector.grpc.tls.cert string Path to TLS certificate file

--collector.grpc.tls.key string Path to TLS key file

--collector.http-port int The HTTP port for the collector service (default 14268)

--collector.num-workers int The number of workers pulling items from the queue (default 50)

--collector.port int The TChannel port for the collector service (default 14267)

--collector.queue-size int The queue size of the collector (default 2000)

--collector.zipkin.http-port int The HTTP port for the Zipkin collector service e.g. 9411

--config-file string Configuration file in JSON, TOML, YAML, HCL, or Java properties formats (default none). See spf13/viper for precedence.

--health-check-http-port int The http port for the health check service (default 14269)

-h, --help help for jaeger-collector

--log-level string Minimal allowed log Level. For more levels see https://github.com/uber-go/zap (default "info")

--memory.max-traces int The maximum amount of traces to store in memory

--metrics-backend string Defines which metrics backend to use for metrics reporting: expvar, prometheus, none (default "prometheus")

--metrics-http-route string Defines the route of HTTP endpoint for metrics backends that support scraping (default "/metrics")

--sampling.strategies-file string The path for the sampling strategies file in JSON format. See sampling documentation to see format of the file

--span-storage.type string Deprecated; please use SPAN_STORAGE_TYPE environment variable. Run this binary with "env" command for help.

Use "jaeger-collector [command] --help" for more information about a command.

jaeger-query --help

$ docker run --rm jaegertracing/jaeger-query:1.11.0 --help

Jaeger query service provides a Web UI and an API for accessing trace data.

Usage:

jaeger-query [flags]

jaeger-query [command]

Available Commands:

env Help about environment variables

help Help about any command

version Print the version

Flags:

--cassandra...

--config-file string Configuration file in JSON, TOML, YAML, HCL, or Java properties formats (default none). See spf13/viper for precedence.

--health-check-http-port int The http port for the health check service (default 16687)

-h, --help help for jaeger-query

--log-level string Minimal allowed log Level. For more levels see https://github.com/uber-go/zap (default "info")

--metrics-backend string Defines which metrics backend to use for metrics reporting: expvar, prometheus, none (default "prometheus")

--metrics-http-route string Defines the route of HTTP endpoint for metrics backends that support scraping (default "/metrics")

--query.base-path string The base path for all HTTP routes, e.g. /jaeger; useful when running behind a reverse proxy (default "/")

--query.port int The port for the query service (default 16686)

--query.static-files string The directory path override for the static assets for the UI

--query.ui-config string The path to the UI configuration file in JSON format

--span-storage.type string Deprecated; please use SPAN_STORAGE_TYPE environment variable. Run this binary with "env" command for help.

Use "jaeger-query [command] --help" for more information about a command.

Metrics

jaeger-agent metrics

# HELP go_gc_duration_seconds A summary of the GC invocation durations.

# TYPE go_gc_duration_seconds summary

...

# HELP jaeger_agent_collector_proxy_total collector-proxy

# TYPE jaeger_agent_collector_proxy_total counter

jaeger_agent_collector_proxy_total{endpoint="baggage",protocol="grpc",result="err"} 0

jaeger_agent_collector_proxy_total{endpoint="baggage",protocol="grpc",result="ok"} 0

jaeger_agent_collector_proxy_total{endpoint="sampling",protocol="grpc",result="err"} 0

jaeger_agent_collector_proxy_total{endpoint="sampling",protocol="grpc",result="ok"} 0

# HELP jaeger_agent_http_server_errors_total http-server.errors

# TYPE jaeger_agent_http_server_errors_total counter

jaeger_agent_http_server_errors_total{source="all",status="4xx"} 0

jaeger_agent_http_server_errors_total{source="collector-proxy",status="5xx"} 0

jaeger_agent_http_server_errors_total{source="thrift",status="5xx"} 0

jaeger_agent_http_server_errors_total{source="write",status="5xx"} 0

# HELP jaeger_agent_http_server_requests_total http-server.requests

# TYPE jaeger_agent_http_server_requests_total counter

jaeger_agent_http_server_requests_total{type="baggage"} 0

jaeger_agent_http_server_requests_total{type="sampling"} 0

jaeger_agent_http_server_requests_total{type="sampling-legacy"} 0

# HELP jaeger_agent_reporter_batch_size batch_size

# TYPE jaeger_agent_reporter_batch_size gauge

jaeger_agent_reporter_batch_size{format="jaeger",protocol="grpc"} 0

jaeger_agent_reporter_batch_size{format="zipkin",protocol="grpc"} 0

# HELP jaeger_agent_reporter_batches_failures_total batches.failures

# TYPE jaeger_agent_reporter_batches_failures_total counter

jaeger_agent_reporter_batches_failures_total{format="jaeger",protocol="grpc"} 0

jaeger_agent_reporter_batches_failures_total{format="zipkin",protocol="grpc"} 0

# HELP jaeger_agent_reporter_batches_submitted_total batches.submitted

# TYPE jaeger_agent_reporter_batches_submitted_total counter

jaeger_agent_reporter_batches_submitted_total{format="jaeger",protocol="grpc"} 0

jaeger_agent_reporter_batches_submitted_total{format="zipkin",protocol="grpc"} 0

# HELP jaeger_agent_reporter_spans_failures_total spans.failures

# TYPE jaeger_agent_reporter_spans_failures_total counter

jaeger_agent_reporter_spans_failures_total{format="jaeger",protocol="grpc"} 0

jaeger_agent_reporter_spans_failures_total{format="zipkin",protocol="grpc"} 0

# HELP jaeger_agent_reporter_spans_submitted_total spans.submitted

# TYPE jaeger_agent_reporter_spans_submitted_total counter

jaeger_agent_reporter_spans_submitted_total{format="jaeger",protocol="grpc"} 0

jaeger_agent_reporter_spans_submitted_total{format="zipkin",protocol="grpc"} 0

# HELP jaeger_agent_thrift_udp_server_packet_size thrift.udp.server.packet_size

# TYPE jaeger_agent_thrift_udp_server_packet_size gauge

jaeger_agent_thrift_udp_server_packet_size{model="jaeger",protocol="binary"} 0

jaeger_agent_thrift_udp_server_packet_size{model="jaeger",protocol="compact"} 0

jaeger_agent_thrift_udp_server_packet_size{model="zipkin",protocol="compact"} 0

# HELP jaeger_agent_thrift_udp_server_packets_dropped_total thrift.udp.server.packets.dropped

# TYPE jaeger_agent_thrift_udp_server_packets_dropped_total counter

jaeger_agent_thrift_udp_server_packets_dropped_total{model="jaeger",protocol="binary"} 0

jaeger_agent_thrift_udp_server_packets_dropped_total{model="jaeger",protocol="compact"} 0

jaeger_agent_thrift_udp_server_packets_dropped_total{model="zipkin",protocol="compact"} 0

# HELP jaeger_agent_thrift_udp_server_packets_processed_total thrift.udp.server.packets.processed

# TYPE jaeger_agent_thrift_udp_server_packets_processed_total counter

jaeger_agent_thrift_udp_server_packets_processed_total{model="jaeger",protocol="binary"} 0

jaeger_agent_thrift_udp_server_packets_processed_total{model="jaeger",protocol="compact"} 0

jaeger_agent_thrift_udp_server_packets_processed_total{model="zipkin",protocol="compact"} 0

# HELP jaeger_agent_thrift_udp_server_queue_size thrift.udp.server.queue_size

# TYPE jaeger_agent_thrift_udp_server_queue_size gauge

jaeger_agent_thrift_udp_server_queue_size{model="jaeger",protocol="binary"} 0

jaeger_agent_thrift_udp_server_queue_size{model="jaeger",protocol="compact"} 0

jaeger_agent_thrift_udp_server_queue_size{model="zipkin",protocol="compact"} 0

# HELP jaeger_agent_thrift_udp_server_read_errors_total thrift.udp.server.read.errors

# TYPE jaeger_agent_thrift_udp_server_read_errors_total counter

jaeger_agent_thrift_udp_server_read_errors_total{model="jaeger",protocol="binary"} 0

jaeger_agent_thrift_udp_server_read_errors_total{model="jaeger",protocol="compact"} 0

jaeger_agent_thrift_udp_server_read_errors_total{model="zipkin",protocol="compact"} 0

# HELP jaeger_agent_thrift_udp_t_processor_close_time thrift.udp.t-processor.close-time

# TYPE jaeger_agent_thrift_udp_t_processor_close_time histogram

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="jaeger",protocol="binary",le="0.005"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="jaeger",protocol="binary",le="0.01"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="jaeger",protocol="binary",le="0.025"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="jaeger",protocol="binary",le="0.05"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="jaeger",protocol="binary",le="0.1"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="jaeger",protocol="binary",le="0.25"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="jaeger",protocol="binary",le="0.5"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="jaeger",protocol="binary",le="1"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="jaeger",protocol="binary",le="2.5"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="jaeger",protocol="binary",le="5"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="jaeger",protocol="binary",le="10"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="jaeger",protocol="binary",le="+Inf"} 0

jaeger_agent_thrift_udp_t_processor_close_time_sum{model="jaeger",protocol="binary"} 0

jaeger_agent_thrift_udp_t_processor_close_time_count{model="jaeger",protocol="binary"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="jaeger",protocol="compact",le="0.005"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="jaeger",protocol="compact",le="0.01"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="jaeger",protocol="compact",le="0.025"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="jaeger",protocol="compact",le="0.05"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="jaeger",protocol="compact",le="0.1"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="jaeger",protocol="compact",le="0.25"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="jaeger",protocol="compact",le="0.5"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="jaeger",protocol="compact",le="1"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="jaeger",protocol="compact",le="2.5"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="jaeger",protocol="compact",le="5"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="jaeger",protocol="compact",le="10"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="jaeger",protocol="compact",le="+Inf"} 0

jaeger_agent_thrift_udp_t_processor_close_time_sum{model="jaeger",protocol="compact"} 0

jaeger_agent_thrift_udp_t_processor_close_time_count{model="jaeger",protocol="compact"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="zipkin",protocol="compact",le="0.005"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="zipkin",protocol="compact",le="0.01"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="zipkin",protocol="compact",le="0.025"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="zipkin",protocol="compact",le="0.05"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="zipkin",protocol="compact",le="0.1"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="zipkin",protocol="compact",le="0.25"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="zipkin",protocol="compact",le="0.5"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="zipkin",protocol="compact",le="1"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="zipkin",protocol="compact",le="2.5"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="zipkin",protocol="compact",le="5"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="zipkin",protocol="compact",le="10"} 0

jaeger_agent_thrift_udp_t_processor_close_time_bucket{model="zipkin",protocol="compact",le="+Inf"} 0

jaeger_agent_thrift_udp_t_processor_close_time_sum{model="zipkin",protocol="compact"} 0

jaeger_agent_thrift_udp_t_processor_close_time_count{model="zipkin",protocol="compact"} 0

# HELP jaeger_agent_thrift_udp_t_processor_handler_errors_total thrift.udp.t-processor.handler-errors

# TYPE jaeger_agent_thrift_udp_t_processor_handler_errors_total counter

jaeger_agent_thrift_udp_t_processor_handler_errors_total{model="jaeger",protocol="binary"} 0

jaeger_agent_thrift_udp_t_processor_handler_errors_total{model="jaeger",protocol="compact"} 0

jaeger_agent_thrift_udp_t_processor_handler_errors_total{model="zipkin",protocol="compact"} 0

# HELP process_cpu_seconds_total Total user and system CPU time spent in seconds.

# TYPE process_cpu_seconds_total counter

process_cpu_seconds_total 0.05

# HELP process_max_fds Maximum number of open file descriptors.

# TYPE process_max_fds gauge

process_max_fds 1.048576e+06

# HELP process_open_fds Number of open file descriptors.

# TYPE process_open_fds gauge

process_open_fds 11

# HELP process_resident_memory_bytes Resident memory size in bytes.

# TYPE process_resident_memory_bytes gauge

process_resident_memory_bytes 1.3283328e+07

# HELP process_start_time_seconds Start time of the process since unix epoch in seconds.

# TYPE process_start_time_seconds gauge

process_start_time_seconds 1.55540751234e+09

# HELP process_virtual_memory_bytes Virtual memory size in bytes.

# TYPE process_virtual_memory_bytes gauge

process_virtual_memory_bytes 1.22187776e+08

jaeger-collector metrics

# HELP go_gc_duration_seconds A summary of the GC invocation durations.

# TYPE go_gc_duration_seconds summary

...

# HELP jaeger_collector_batch_size batch-size

# TYPE jaeger_collector_batch_size gauge

jaeger_collector_batch_size{host="1bd33f303256"} 0

# HELP jaeger_collector_in_queue_latency in-queue-latency

# TYPE jaeger_collector_in_queue_latency histogram

jaeger_collector_in_queue_latency_bucket{host="1bd33f303256",le="0.005"} 0

jaeger_collector_in_queue_latency_bucket{host="1bd33f303256",le="0.01"} 0

jaeger_collector_in_queue_latency_bucket{host="1bd33f303256",le="0.025"} 0

jaeger_collector_in_queue_latency_bucket{host="1bd33f303256",le="0.05"} 0

jaeger_collector_in_queue_latency_bucket{host="1bd33f303256",le="0.1"} 0

jaeger_collector_in_queue_latency_bucket{host="1bd33f303256",le="0.25"} 0

jaeger_collector_in_queue_latency_bucket{host="1bd33f303256",le="0.5"} 0

jaeger_collector_in_queue_latency_bucket{host="1bd33f303256",le="1"} 0

jaeger_collector_in_queue_latency_bucket{host="1bd33f303256",le="2.5"} 0

jaeger_collector_in_queue_latency_bucket{host="1bd33f303256",le="5"} 0

jaeger_collector_in_queue_latency_bucket{host="1bd33f303256",le="10"} 0

jaeger_collector_in_queue_latency_bucket{host="1bd33f303256",le="+Inf"} 0

jaeger_collector_in_queue_latency_sum{host="1bd33f303256"} 0

jaeger_collector_in_queue_latency_count{host="1bd33f303256"} 0

# HELP jaeger_collector_queue_length queue-length

# TYPE jaeger_collector_queue_length gauge

jaeger_collector_queue_length{host="1bd33f303256"} 0

# HELP jaeger_collector_save_latency save-latency

# TYPE jaeger_collector_save_latency histogram

jaeger_collector_save_latency_bucket{host="1bd33f303256",le="0.005"} 0

jaeger_collector_save_latency_bucket{host="1bd33f303256",le="0.01"} 0

jaeger_collector_save_latency_bucket{host="1bd33f303256",le="0.025"} 0

jaeger_collector_save_latency_bucket{host="1bd33f303256",le="0.05"} 0

jaeger_collector_save_latency_bucket{host="1bd33f303256",le="0.1"} 0

jaeger_collector_save_latency_bucket{host="1bd33f303256",le="0.25"} 0

jaeger_collector_save_latency_bucket{host="1bd33f303256",le="0.5"} 0

jaeger_collector_save_latency_bucket{host="1bd33f303256",le="1"} 0

jaeger_collector_save_latency_bucket{host="1bd33f303256",le="2.5"} 0

jaeger_collector_save_latency_bucket{host="1bd33f303256",le="5"} 0

jaeger_collector_save_latency_bucket{host="1bd33f303256",le="10"} 0

jaeger_collector_save_latency_bucket{host="1bd33f303256",le="+Inf"} 0

jaeger_collector_save_latency_sum{host="1bd33f303256"} 0

jaeger_collector_save_latency_count{host="1bd33f303256"} 0

# HELP jaeger_collector_spans_dropped_total spans.dropped

# TYPE jaeger_collector_spans_dropped_total counter

jaeger_collector_spans_dropped_total{host="1bd33f303256"} 0

# HELP jaeger_collector_spans_received_total received

# TYPE jaeger_collector_spans_received_total counter

jaeger_collector_spans_received_total{debug="false",format="jaeger",svc="other-services"} 0

jaeger_collector_spans_received_total{debug="false",format="unknown",svc="other-services"} 0

jaeger_collector_spans_received_total{debug="false",format="zipkin",svc="other-services"} 0

jaeger_collector_spans_received_total{debug="true",format="jaeger",svc="other-services"} 0

jaeger_collector_spans_received_total{debug="true",format="unknown",svc="other-services"} 0

jaeger_collector_spans_received_total{debug="true",format="zipkin",svc="other-services"} 0

# HELP jaeger_collector_spans_rejected_total rejected

# TYPE jaeger_collector_spans_rejected_total counter

jaeger_collector_spans_rejected_total{debug="false",format="jaeger",svc="other-services"} 0

jaeger_collector_spans_rejected_total{debug="false",format="unknown",svc="other-services"} 0

jaeger_collector_spans_rejected_total{debug="false",format="zipkin",svc="other-services"} 0

jaeger_collector_spans_rejected_total{debug="true",format="jaeger",svc="other-services"} 0

jaeger_collector_spans_rejected_total{debug="true",format="unknown",svc="other-services"} 0

jaeger_collector_spans_rejected_total{debug="true",format="zipkin",svc="other-services"} 0

# HELP jaeger_collector_spans_saved_by_svc_total saved-by-svc

# TYPE jaeger_collector_spans_saved_by_svc_total counter

jaeger_collector_spans_saved_by_svc_total{debug="false",result="err",svc="other-services"} 0

jaeger_collector_spans_saved_by_svc_total{debug="false",result="ok",svc="other-services"} 0

jaeger_collector_spans_saved_by_svc_total{debug="true",result="err",svc="other-services"} 0

jaeger_collector_spans_saved_by_svc_total{debug="true",result="ok",svc="other-services"} 0

# HELP jaeger_collector_spans_serviceNames spans.serviceNames

# TYPE jaeger_collector_spans_serviceNames gauge

jaeger_collector_spans_serviceNames{host="1bd33f303256"} 0

# HELP jaeger_collector_traces_received_total received

# TYPE jaeger_collector_traces_received_total counter

jaeger_collector_traces_received_total{debug="false",format="jaeger",svc="other-services"} 0

jaeger_collector_traces_received_total{debug="false",format="unknown",svc="other-services"} 0

jaeger_collector_traces_received_total{debug="false",format="zipkin",svc="other-services"} 0

jaeger_collector_traces_received_total{debug="true",format="jaeger",svc="other-services"} 0

jaeger_collector_traces_received_total{debug="true",format="unknown",svc="other-services"} 0

jaeger_collector_traces_received_total{debug="true",format="zipkin",svc="other-services"} 0

# HELP jaeger_collector_traces_rejected_total rejected

# TYPE jaeger_collector_traces_rejected_total counter

jaeger_collector_traces_rejected_total{debug="false",format="jaeger",svc="other-services"} 0

jaeger_collector_traces_rejected_total{debug="false",format="unknown",svc="other-services"} 0

jaeger_collector_traces_rejected_total{debug="false",format="zipkin",svc="other-services"} 0

jaeger_collector_traces_rejected_total{debug="true",format="jaeger",svc="other-services"} 0

jaeger_collector_traces_rejected_total{debug="true",format="unknown",svc="other-services"} 0

jaeger_collector_traces_rejected_total{debug="true",format="zipkin",svc="other-services"} 0

# HELP jaeger_collector_traces_saved_by_svc_total saved-by-svc

# TYPE jaeger_collector_traces_saved_by_svc_total counter

jaeger_collector_traces_saved_by_svc_total{debug="false",result="err",svc="other-services"} 0

jaeger_collector_traces_saved_by_svc_total{debug="false",result="ok",svc="other-services"} 0

jaeger_collector_traces_saved_by_svc_total{debug="true",result="err",svc="other-services"} 0

jaeger_collector_traces_saved_by_svc_total{debug="true",result="ok",svc="other-services"} 0

# HELP process_cpu_seconds_total Total user and system CPU time spent in seconds.

# TYPE process_cpu_seconds_total counter

process_cpu_seconds_total 0.71

# HELP process_max_fds Maximum number of open file descriptors.

# TYPE process_max_fds gauge

process_max_fds 1.048576e+06

# HELP process_open_fds Number of open file descriptors.

# TYPE process_open_fds gauge

process_open_fds 11

# HELP process_resident_memory_bytes Resident memory size in bytes.

# TYPE process_resident_memory_bytes gauge

process_resident_memory_bytes 1.9329024e+07

# HELP process_start_time_seconds Start time of the process since unix epoch in seconds.

# TYPE process_start_time_seconds gauge

process_start_time_seconds 1.55540675992e+09

# HELP process_virtual_memory_bytes Virtual memory size in bytes.

# TYPE process_virtual_memory_bytes gauge

process_virtual_memory_bytes 1.3008896e+08

jaeger-query metrics
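The metrics dumps in this section are in the Prometheus text exposition format: `# HELP` and `# TYPE` comment lines, followed by sample lines of the form `name{label="value",...} value`. Reading them by eye gets tedious, so the following is a minimal sketch of a parser that turns such a dump into structured samples. The function name `parse_metrics` and the `SAMPLE` text are illustrative assumptions, not part of Jaeger or of any Prometheus client library, and the sketch deliberately ignores optional trailing timestamps.

```python
import re

# One sample line: metric name, optional {labels}, then the value.
SAMPLE_RE = re.compile(r'^([A-Za-z_:][A-Za-z0-9_:]*)(?:\{(.*)\})?\s+(\S+)$')
# One label pair inside the braces: key="value" (escaped quotes allowed).
LABEL_RE = re.compile(r'([A-Za-z_][A-Za-z0-9_]*)="((?:[^"\\]|\\.)*)"')


def parse_metrics(text):
    """Return a list of (name, labels, value) tuples from exposition text.

    Hypothetical helper for illustration only. Comment lines, blank lines,
    and anything that does not look like a plain sample line are skipped.
    """
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        match = SAMPLE_RE.match(line)
        if match is None:
            continue
        name, raw_labels, raw_value = match.groups()
        try:
            value = float(raw_value)  # float() also accepts "+Inf" and "NaN"
        except ValueError:
            continue  # not a numeric sample, ignore it
        labels = dict(LABEL_RE.findall(raw_labels or ""))
        samples.append((name, labels, value))
    return samples


# A few lines copied from the collector dump, used as sample input.
SAMPLE = """
# HELP jaeger_collector_queue_length queue-length
# TYPE jaeger_collector_queue_length gauge
jaeger_collector_queue_length{host="1bd33f303256"} 0
jaeger_collector_save_latency_bucket{host="1bd33f303256",le="+Inf"} 0
process_max_fds 1.048576e+06
"""

if __name__ == "__main__":
    for name, labels, value in parse_metrics(SAMPLE):
        print(name, labels, value)
```

With the samples in a list, it becomes straightforward to filter by metric name or label, for example to pick out every `jaeger_collector_spans_dropped_total` series and check whether any value is non-zero.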

# HELP go_gc_duration_seconds A summary of the GC invocation durations.

# TYPE go_gc_duration_seconds summary

...

# HELP jaeger_query_latency latency

# TYPE jaeger_query_latency histogram

jaeger_query_latency_bucket{operation="find_trace_ids",result="err",le="0.005"} 0

jaeger_query_latency_bucket{operation="find_trace_ids",result="err",le="0.01"} 0

jaeger_query_latency_bucket{operation="find_trace_ids",result="err",le="0.025"} 0

jaeger_query_latency_bucket{operation="find_trace_ids",result="err",le="0.05"} 0

jaeger_query_latency_bucket{operation="find_trace_ids",result="err",le="0.1"} 0

jaeger_query_latency_bucket{operation="find_trace_ids",result="err",le="0.25"} 0

jaeger_query_latency_bucket{operation="find_trace_ids",result="err",le="0.5"} 0

jaeger_query_latency_bucket{operation="find_trace_ids",result="err",le="1"} 0

jaeger_query_latency_bucket{operation="find_trace_ids",result="err",le="2.5"} 0

jaeger_query_latency_bucket{operation="find_trace_ids",result="err",le="5"} 0

jaeger_query_latency_bucket{operation="find_trace_ids",result="err",le="10"} 0

jaeger_query_latency_bucket{operation="find_trace_ids",result="err",le="+Inf"} 0

jaeger_query_latency_sum{operation="find_trace_ids",result="err"} 0

jaeger_query_latency_count{operation="find_trace_ids",result="err"} 0

jaeger_query_latency_bucket{operation="find_trace_ids",result="ok",le="0.005"} 0

jaeger_query_latency_bucket{operation="find_trace_ids",result="ok",le="0.01"} 0

jaeger_query_latency_bucket{operation="find_trace_ids",result="ok",le="0.025"} 0

jaeger_query_latency_bucket{operation="find_trace_ids",result="ok",le="0.05"} 0

jaeger_query_latency_bucket{operation="find_trace_ids",result="ok",le="0.1"} 0

jaeger_query_latency_bucket{operation="find_trace_ids",result="ok",le="0.25"} 0

jaeger_query_latency_bucket{operation="find_trace_ids",result="ok",le="0.5"} 0

jaeger_query_latency_bucket{operation="find_trace_ids",result="ok",le="1"} 0

jaeger_query_latency_bucket{operation="find_trace_ids",result="ok",le="2.5"} 0

jaeger_query_latency_bucket{operation="find_trace_ids",result="ok",le="5"} 0

jaeger_query_latency_bucket{operation="find_trace_ids",result="ok",le="10"} 0

jaeger_query_latency_bucket{operation="find_trace_ids",result="ok",le="+Inf"} 0

jaeger_query_latency_sum{operation="find_trace_ids",result="ok"} 0

jaeger_query_latency_count{operation="find_trace_ids",result="ok"} 0

jaeger_query_latency_bucket{operation="find_traces",result="err",le="0.005"} 0

jaeger_query_latency_bucket{operation="find_traces",result="err",le="0.01"} 0

jaeger_query_latency_bucket{operation="find_traces",result="err",le="0.025"} 0

jaeger_query_latency_bucket{operation="find_traces",result="err",le="0.05"} 0

jaeger_query_latency_bucket{operation="find_traces",result="err",le="0.1"} 0

jaeger_query_latency_bucket{operation="find_traces",result="err",le="0.25"} 0

jaeger_query_latency_bucket{operation="find_traces",result="err",le="0.5"} 0

jaeger_query_latency_bucket{operation="find_traces",result="err",le="1"} 0

jaeger_query_latency_bucket{operation="find_traces",result="err",le="2.5"} 0

jaeger_query_latency_bucket{operation="find_traces",result="err",le="5"} 0

jaeger_query_latency_bucket{operation="find_traces",result="err",le="10"} 0

jaeger_query_latency_bucket{operation="find_traces",result="err",le="+Inf"} 0

jaeger_query_latency_sum{operation="find_traces",result="err"} 0

jaeger_query_latency_count{operation="find_traces",result="err"} 0

jaeger_query_latency_bucket{operation="find_traces",result="ok",le="0.005"} 0

jaeger_query_latency_bucket{operation="find_traces",result="ok",le="0.01"} 0

jaeger_query_latency_bucket{operation="find_traces",result="ok",le="0.025"} 0

jaeger_query_latency_bucket{operation="find_traces",result="ok",le="0.05"} 0

jaeger_query_latency_bucket{operation="find_traces",result="ok",le="0.1"} 0

jaeger_query_latency_bucket{operation="find_traces",result="ok",le="0.25"} 0

jaeger_query_latency_bucket{operation="find_traces",result="ok",le="0.5"} 0

jaeger_query_latency_bucket{operation="find_traces",result="ok",le="1"} 0

jaeger_query_latency_bucket{operation="find_traces",result="ok",le="2.5"} 0

jaeger_query_latency_bucket{operation="find_traces",result="ok",le="5"} 0

jaeger_query_latency_bucket{operation="find_traces",result="ok",le="10"} 0

jaeger_query_latency_bucket{operation="find_traces",result="ok",le="+Inf"} 0

jaeger_query_latency_sum{operation="find_traces",result="ok"} 0

jaeger_query_latency_count{operation="find_traces",result="ok"} 0

jaeger_query_latency_bucket{operation="get_operations",result="err",le="0.005"} 0

jaeger_query_latency_bucket{operation="get_operations",result="err",le="0.01"} 0

jaeger_query_latency_bucket{operation="get_operations",result="err",le="0.025"} 0

jaeger_query_latency_bucket{operation="get_operations",result="err",le="0.05"} 0

jaeger_query_latency_bucket{operation="get_operations",result="err",le="0.1"} 0

jaeger_query_latency_bucket{operation="get_operations",result="err",le="0.25"} 0

jaeger_query_latency_bucket{operation="get_operations",result="err",le="0.5"} 0

jaeger_query_latency_bucket{operation="get_operations",result="err",le="1"} 0

jaeger_query_latency_bucket{operation="get_operations",result="err",le="2.5"} 0

jaeger_query_latency_bucket{operation="get_operations",result="err",le="5"} 0

jaeger_query_latency_bucket{operation="get_operations",result="err",le="10"} 0

jaeger_query_latency_bucket{operation="get_operations",result="err",le="+Inf"} 0

jaeger_query_latency_sum{operation="get_operations",result="err"} 0

jaeger_query_latency_count{operation="get_operations",result="err"} 0

jaeger_query_latency_bucket{operation="get_operations",result="ok",le="0.005"} 0

jaeger_query_latency_bucket{operation="get_operations",result="ok",le="0.01"} 0

jaeger_query_latency_bucket{operation="get_operations",result="ok",le="0.025"} 0

jaeger_query_latency_bucket{operation="get_operations",result="ok",le="0.05"} 0

jaeger_query_latency_bucket{operation="get_operations",result="ok",le="0.1"} 0

jaeger_query_latency_bucket{operation="get_operations",result="ok",le="0.25"} 0

jaeger_query_latency_bucket{operation="get_operations",result="ok",le="0.5"} 0

jaeger_query_latency_bucket{operation="get_operations",result="ok",le="1"} 0

jaeger_query_latency_bucket{operation="get_operations",result="ok",le="2.5"} 0

jaeger_query_latency_bucket{operation="get_operations",result="ok",le="5"} 0

jaeger_query_latency_bucket{operation="get_operations",result="ok",le="10"} 0

jaeger_query_latency_bucket{operation="get_operations",result="ok",le="+Inf"} 0

jaeger_query_latency_sum{operation="get_operations",result="ok"} 0

jaeger_query_latency_count{operation="get_operations",result="ok"} 0

jaeger_query_latency_bucket{operation="get_services",result="err",le="0.005"} 0

jaeger_query_latency_bucket{operation="get_services",result="err",le="0.01"} 0

jaeger_query_latency_bucket{operation="get_services",result="err",le="0.025"} 0

jaeger_query_latency_bucket{operation="get_services",result="err",le="0.05"} 0

jaeger_query_latency_bucket{operation="get_services",result="err",le="0.1"} 0

jaeger_query_latency_bucket{operation="get_services",result="err",le="0.25"} 0

jaeger_query_latency_bucket{operation="get_services",result="err",le="0.5"} 0

jaeger_query_latency_bucket{operation="get_services",result="err",le="1"} 0

jaeger_query_latency_bucket{operation="get_services",result="err",le="2.5"} 0

jaeger_query_latency_bucket{operation="get_services",result="err",le="5"} 0

jaeger_query_latency_bucket{operation="get_services",result="err",le="10"} 0

jaeger_query_latency_bucket{operation="get_services",result="err",le="+Inf"} 0

jaeger_query_latency_sum{operation="get_services",result="err"} 0

jaeger_query_latency_count{operation="get_services",result="err"} 0

jaeger_query_latency_bucket{operation="get_services",result="ok",le="0.005"} 0

jaeger_query_latency_bucket{operation="get_services",result="ok",le="0.01"} 0

jaeger_query_latency_bucket{operation="get_services",result="ok",le="0.025"} 0

jaeger_query_latency_bucket{operation="get_services",result="ok",le="0.05"} 0

jaeger_query_latency_bucket{operation="get_services",result="ok",le="0.1"} 0

jaeger_query_latency_bucket{operation="get_services",result="ok",le="0.25"} 0

jaeger_query_latency_bucket{operation="get_services",result="ok",le="0.5"} 0

jaeger_query_latency_bucket{operation="get_services",result="ok",le="1"} 0

jaeger_query_latency_bucket{operation="get_services",result="ok",le="2.5"} 0

jaeger_query_latency_bucket{operation="get_services",result="ok",le="5"} 0

jaeger_query_latency_bucket{operation="get_services",result="ok",le="10"} 0

jaeger_query_latency_bucket{operation="get_services",result="ok",le="+Inf"} 0

jaeger_query_latency_sum{operation="get_services",result="ok"} 0

jaeger_query_latency_count{operation="get_services",result="ok"} 0

jaeger_query_latency_bucket{operation="get_trace",result="err",le="0.005"} 0

jaeger_query_latency_bucket{operation="get_trace",result="err",le="0.01"} 0

jaeger_query_latency_bucket{operation="get_trace",result="err",le="0.025"} 0

jaeger_query_latency_bucket{operation="get_trace",result="err",le="0.05"} 0

jaeger_query_latency_bucket{operation="get_trace",result="err",le="0.1"} 0

jaeger_query_latency_bucket{operation="get_trace",result="err",le="0.25"} 0

jaeger_query_latency_bucket{operation="get_trace",result="err",le="0.5"} 0

jaeger_query_latency_bucket{operation="get_trace",result="err",le="1"} 0

jaeger_query_latency_bucket{operation="get_trace",result="err",le="2.5"} 0

jaeger_query_latency_bucket{operation="get_trace",result="err",le="5"} 0

jaeger_query_latency_bucket{operation="get_trace",result="err",le="10"} 0

jaeger_query_latency_bucket{operation="get_trace",result="err",le="+Inf"} 0

jaeger_query_latency_sum{operation="get_trace",result="err"} 0

jaeger_query_latency_count{operation="get_trace",result="err"} 0

jaeger_query_latency_bucket{operation="get_trace",result="ok",le="0.005"} 0

jaeger_query_latency_bucket{operation="get_trace",result="ok",le="0.01"} 0

jaeger_query_latency_bucket{operation="get_trace",result="ok",le="0.025"} 0

jaeger_query_latency_bucket{operation="get_trace",result="ok",le="0.05"} 0

jaeger_query_latency_bucket{operation="get_trace",result="ok",le="0.1"} 0

jaeger_query_latency_bucket{operation="get_trace",result="ok",le="0.25"} 0

jaeger_query_latency_bucket{operation="get_trace",result="ok",le="0.5"} 0

jaeger_query_latency_bucket{operation="get_trace",result="ok",le="1"} 0

jaeger_query_latency_bucket{operation="get_trace",result="ok",le="2.5"} 0

jaeger_query_latency_bucket{operation="get_trace",result="ok",le="5"} 0

jaeger_query_latency_bucket{operation="get_trace",result="ok",le="10"} 0

jaeger_query_latency_bucket{operation="get_trace",result="ok",le="+Inf"} 0

jaeger_query_latency_sum{operation="get_trace",result="ok"} 0

jaeger_query_latency_count{operation="get_trace",result="ok"} 0

# HELP jaeger_query_requests_total requests

# TYPE jaeger_query_requests_total counter

jaeger_query_requests_total{operation="find_trace_ids",result="err"} 0

jaeger_query_requests_total{operation="find_trace_ids",result="ok"} 0

jaeger_query_requests_total{operation="find_traces",result="err"} 0

jaeger_query_requests_total{operation="find_traces",result="ok"} 0

jaeger_query_requests_total{operation="get_operations",result="err"} 0

jaeger_query_requests_total{operation="get_operations",result="ok"} 0

jaeger_query_requests_total{operation="get_services",result="err"} 0

jaeger_query_requests_total{operation="get_services",result="ok"} 0

jaeger_query_requests_total{operation="get_trace",result="err"} 0

jaeger_query_requests_total{operation="get_trace",result="ok"} 0

# HELP jaeger_query_responses responses

# TYPE jaeger_query_responses histogram

jaeger_query_responses_bucket{operation="find_trace_ids",le="0.005"} 0

jaeger_query_responses_bucket{operation="find_trace_ids",le="0.01"} 0

jaeger_query_responses_bucket{operation="find_trace_ids",le="0.025"} 0

jaeger_query_responses_bucket{operation="find_trace_ids",le="0.05"} 0

jaeger_query_responses_bucket{operation="find_trace_ids",le="0.1"} 0

jaeger_query_responses_bucket{operation="find_trace_ids",le="0.25"} 0

jaeger_query_responses_bucket{operation="find_trace_ids",le="0.5"} 0

jaeger_query_responses_bucket{operation="find_trace_ids",le="1"} 0

jaeger_query_responses_bucket{operation="find_trace_ids",le="2.5"} 0

jaeger_query_responses_bucket{operation="find_trace_ids",le="5"} 0

jaeger_query_responses_bucket{operation="find_trace_ids",le="10"} 0

jaeger_query_responses_bucket{operation="find_trace_ids",le="+Inf"} 0

jaeger_query_responses_sum{operation="find_trace_ids"} 0

jaeger_query_responses_count{operation="find_trace_ids"} 0

jaeger_query_responses_bucket{operation="find_traces",le="0.005"} 0

jaeger_query_responses_bucket{operation="find_traces",le="0.01"} 0

jaeger_query_responses_bucket{operation="find_traces",le="0.025"} 0

jaeger_query_responses_bucket{operation="find_traces",le="0.05"} 0

jaeger_query_responses_bucket{operation="find_traces",le="0.1"} 0

jaeger_query_responses_bucket{operation="find_traces",le="0.25"} 0

jaeger_query_responses_bucket{operation="find_traces",le="0.5"} 0

jaeger_query_responses_bucket{operation="find_traces",le="1"} 0

jaeger_query_responses_bucket{operation="find_traces",le="2.5"} 0

jaeger_query_responses_bucket{operation="find_traces",le="5"} 0

jaeger_query_responses_bucket{operation="find_traces",le="10"} 0

jaeger_query_responses_bucket{operation="find_traces",le="+Inf"} 0

jaeger_query_responses_sum{operation="find_traces"} 0

jaeger_query_responses_count{operation="find_traces"} 0

jaeger_query_responses_bucket{operation="get_operations",le="0.005"} 0

jaeger_query_responses_bucket{operation="get_operations",le="0.01"} 0

jaeger_query_responses_bucket{operation="get_operations",le="0.025"} 0

jaeger_query_responses_bucket{operation="get_operations",le="0.05"} 0

jaeger_query_responses_bucket{operation="get_operations",le="0.1"} 0

jaeger_query_responses_bucket{operation="get_operations",le="0.25"} 0

jaeger_query_responses_bucket{operation="get_operations",le="0.5"} 0

jaeger_query_responses_bucket{operation="get_operations",le="1"} 0

jaeger_query_responses_bucket{operation="get_operations",le="2.5"} 0

jaeger_query_responses_bucket{operation="get_operations",le="5"} 0

jaeger_query_responses_bucket{operation="get_operations",le="10"} 0

jaeger_query_responses_bucket{operation="get_operations",le="+Inf"} 0

jaeger_query_responses_sum{operation="get_operations"} 0

jaeger_query_responses_count{operation="get_operations"} 0

jaeger_query_responses_bucket{operation="get_services",le="0.005"} 0

jaeger_query_responses_bucket{operation="get_services",le="0.01"} 0

jaeger_query_responses_bucket{operation="get_services",le="0.025"} 0

jaeger_query_responses_bucket{operation="get_services",le="0.05"} 0

jaeger_query_responses_bucket{operation="get_services",le="0.1"} 0

jaeger_query_responses_bucket{operation="get_services",le="0.25"} 0

jaeger_query_responses_bucket{operation="get_services",le="0.5"} 0

jaeger_query_responses_bucket{operation="get_services",le="1"} 0

jaeger_query_responses_bucket{operation="get_services",le="2.5"} 0

jaeger_query_responses_bucket{operation="get_services",le="5"} 0

jaeger_query_responses_bucket{operation="get_services",le="10"} 0

jaeger_query_responses_bucket{operation="get_services",le="+Inf"} 0

jaeger_query_responses_sum{operation="get_services"} 0

jaeger_query_responses_count{operation="get_services"} 0

jaeger_query_responses_bucket{operation="get_trace",le="0.005"} 0

jaeger_query_responses_bucket{operation="get_trace",le="0.01"} 0

jaeger_query_responses_bucket{operation="get_trace",le="0.025"} 0

jaeger_query_responses_bucket{operation="get_trace",le="0.05"} 0

jaeger_query_responses_bucket{operation="get_trace",le="0.1"} 0

jaeger_query_responses_bucket{operation="get_trace",le="0.25"} 0

jaeger_query_responses_bucket{operation="get_trace",le="0.5"} 0

jaeger_query_responses_bucket{operation="get_trace",le="1"} 0

jaeger_query_responses_bucket{operation="get_trace",le="2.5"} 0

jaeger_query_responses_bucket{operation="get_trace",le="5"} 0

jaeger_query_responses_bucket{operation="get_trace",le="10"} 0

jaeger_query_responses_bucket{operation="get_trace",le="+Inf"} 0

jaeger_query_responses_sum{operation="get_trace"} 0

jaeger_query_responses_count{operation="get_trace"} 0

# HELP jaeger_tracer_baggage_restrictions_updates_total Number of times baggage restrictions were successfully updated

# TYPE jaeger_tracer_baggage_restrictions_updates_total counter

jaeger_tracer_baggage_restrictions_updates_total{result="err"} 0

jaeger_tracer_baggage_restrictions_updates_total{result="ok"} 0

# HELP jaeger_tracer_baggage_truncations_total Number of times baggage was truncated as per baggage restrictions

# TYPE jaeger_tracer_baggage_truncations_total counter

jaeger_tracer_baggage_truncations_total 0

# HELP jaeger_tracer_baggage_updates_total Number of times baggage was successfully written or updated on spans

# TYPE jaeger_tracer_baggage_updates_total counter

jaeger_tracer_baggage_updates_total{result="err"} 0

jaeger_tracer_baggage_updates_total{result="ok"} 0

# HELP jaeger_tracer_finished_spans_total Number of spans finished by this tracer

# TYPE jaeger_tracer_finished_spans_total counter

jaeger_tracer_finished_spans_total 0

# HELP jaeger_tracer_reporter_queue_length Current number of spans in the reporter queue

# TYPE jaeger_tracer_reporter_queue_length gauge

jaeger_tracer_reporter_queue_length 0

# HELP jaeger_tracer_reporter_spans_total Number of spans successfully reported

# TYPE jaeger_tracer_reporter_spans_total counter

jaeger_tracer_reporter_spans_total{result="dropped"} 0

jaeger_tracer_reporter_spans_total{result="err"} 0

jaeger_tracer_reporter_spans_total{result="ok"} 0

# HELP jaeger_tracer_sampler_queries_total Number of times the Sampler succeeded to retrieve sampling strategy

# TYPE jaeger_tracer_sampler_queries_total counter

jaeger_tracer_sampler_queries_total{result="err"} 0

jaeger_tracer_sampler_queries_total{result="ok"} 0

# HELP jaeger_tracer_sampler_updates_total Number of times the Sampler succeeded to retrieve and update sampling strategy

# TYPE jaeger_tracer_sampler_updates_total counter

jaeger_tracer_sampler_updates_total{result="err"} 0

jaeger_tracer_sampler_updates_total{result="ok"} 0

# HELP jaeger_tracer_span_context_decoding_errors_total Number of errors decoding tracing context

# TYPE jaeger_tracer_span_context_decoding_errors_total counter

jaeger_tracer_span_context_decoding_errors_total 0

# HELP jaeger_tracer_started_spans_total Number of sampled spans started by this tracer

# TYPE jaeger_tracer_started_spans_total counter

jaeger_tracer_started_spans_total{sampled="n"} 0

jaeger_tracer_started_spans_total{sampled="y"} 0

# HELP jaeger_tracer_throttled_debug_spans_total Number of times debug spans were throttled

# TYPE jaeger_tracer_throttled_debug_spans_total counter

jaeger_tracer_throttled_debug_spans_total 0

# HELP jaeger_tracer_throttler_updates_total Number of times throttler successfully updated

# TYPE jaeger_tracer_throttler_updates_total counter

jaeger_tracer_throttler_updates_total{result="err"} 0

jaeger_tracer_throttler_updates_total{result="ok"} 0

# HELP jaeger_tracer_traces_total Number of traces started by this tracer as sampled

# TYPE jaeger_tracer_traces_total counter

jaeger_tracer_traces_total{sampled="n",state="joined"} 0

jaeger_tracer_traces_total{sampled="n",state="started"} 0

jaeger_tracer_traces_total{sampled="y",state="joined"} 0

jaeger_tracer_traces_total{sampled="y",state="started"} 0

# HELP process_cpu_seconds_total Total user and system CPU time spent in seconds.

# TYPE process_cpu_seconds_total counter

process_cpu_seconds_total 0.09

# HELP process_max_fds Maximum number of open file descriptors.

# TYPE process_max_fds gauge

process_max_fds 1.048576e+06

# HELP process_open_fds Number of open file descriptors.

# TYPE process_open_fds gauge

process_open_fds 9

# HELP process_resident_memory_bytes Resident memory size in bytes.

# TYPE process_resident_memory_bytes gauge

process_resident_memory_bytes 1.4475264e+07

# HELP process_start_time_seconds Start time of the process since unix epoch in seconds.

# TYPE process_start_time_seconds gauge

process_start_time_seconds 1.55540951442e+09

# HELP process_virtual_memory_bytes Virtual memory size in bytes.

# TYPE process_virtual_memory_bytes gauge

process_virtual_memory_bytes 1.31395584e+08

EOF